Jump to Section

What is a Data Warehouse?

A Data Warehouse is a collective bunch of data of the corporate world and these retrieve data from the external source and other operational systems.

It handles the enterprise’s daily transaction operations, which helps in making the business decision due to reporting and analysis tools at different levels. Furthermore, data is inserted into the warehouse database through the ETL process (data extraction, data transformation, and data loading ) by using OLTP applications like external providers and mainframe applications, etc.

The whole concept of DW was established in the 1980s by IBM employees “Paul Murphy and Barry Devlin”. They formally enhanced “Business Data Warehouse”. Therefore, this concept is specially formed to provide the data flow from external operational programs to make the better decision for organizations perspective.

Basically, a Data warehouse provides consolidated data, transactional data, and historical data from different sources. Data warehouse segregates the workload analysis and enhances the organization data from other external links.

Why the Need for a Data Warehouse?

A data warehouse is a domain system of OLTP (Online transaction processing). So, data can be stored in the data warehouse through a data warehouse appliance for months and years to make the historical analysis.

A warehouse appliance is a mixture of software and hardware tools. Therefore, these tools store the data in the database of a data warehouse. Basically, the storage capacity of a data warehouse comes around in terabytes and even petabytes.

So, let’s understand the practical meaning of data warehousing with an example. So, let’s assume a big organization stores data (information) related to its employees, their date of joining, date of leaving, their salaries, product estimation, client and customer information, invoices and sales, etc. Furthermore, the head of an organization may want any information related to the measurement of cost-reduction. The answer will rely on historical analysis of the data. This is the main advantage of data warehousing.

Business Analysis Framework

This framework tells the actual working of a data warehouse. A (Business Analyst) BA retrieves valuable information from the DW (Data Warehouse). Further, it identifies the performance and makes a crucial decision to win with other market stakeholders.

For this reason, a data warehouse increases the organization’s productivity. It also provides a consistent workflow to make the relationship strong with customers.

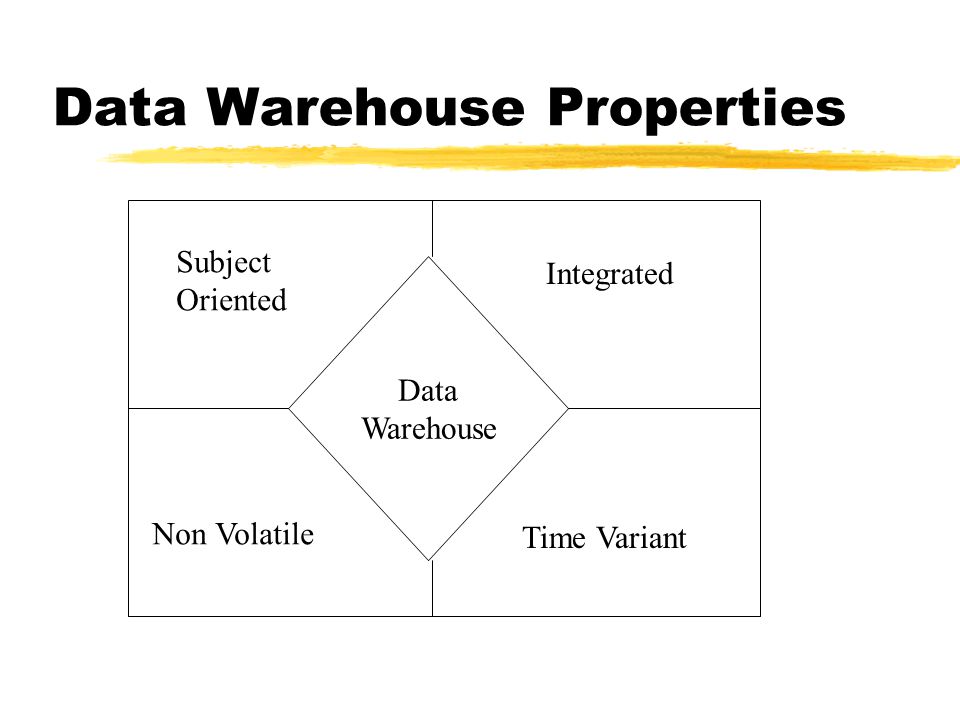

Properties: Data warehouse

There are some features of the data warehouse as follows.

Integrated

It consolidates the data from external sources like relational DBMS, extended DBMS, and Flat files, etc. It also provides effective data analysis in the data warehouse.

Subject Oriented

This is the main feature of the data warehouse. Data warehousing is a subject-oriented system because it provides the data of a subject instead of the company’s ongoing operations. These subjects can be product, branch information, sales, and revenue, etc.

A simple data warehousing does not require any ongoing operations. It also provides data analysis and modeling for decision making.

Non-Volatile

In this situation, old data is not deleted while the new record is added to the database. Old data is merged with new data by using SQL JOINS query. A data warehouse is apart from the external operational sources that do not reflect in the database of the data warehouse.

Time-Variant

Extracted or collected data is analyzed for a particular period of time. Here, data gives results in historical form.

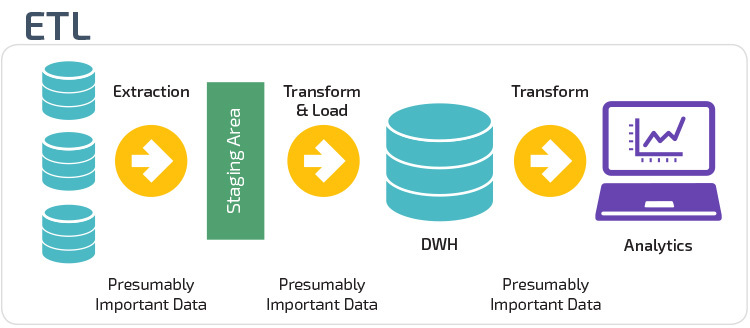

ETL Process in Data Warehouse

ETL is a combination structure of extraction of data, the transformation of data, and the loading of data. These ETL processes help in the preparation of data in database management. It also extracts data from external sources, then transforms the data by using concatenation, data aggregation, and calculation rules.

Later, it loads the information in the data warehouse. As a result, it simply analyzes business data to make a crucial business decision. So, let us understand the ETL process flow in brief as follows.

Extraction

The extraction process reads the data from an unknown external database and extracts it in the desired form to make the data easily accessible for business objectives. On another hand, the major point to extract data is to fetch the relevant information from the system with minimum resources. As a result, this step does not affect the data.

Transformation

This function work like filtration and data cleaning. This step is applied to some important lookup rules and formulas on extracted data as per the business requirements. Then, it converts the data into the desired table or a database. Therefore, this phase of ETL contains a verification, validation, and rejection of data. First of all, it analyzes the extracted data through verification that defines whether the extracted data is in the correct format or not.

Secondly, It validates the extracted data, which means making sure that data is extracted correctly. Here, you can use SQL during the validation process. SQL will help you find the correct data. You can even find duplicate data, sorting of data, data conversion, and consistency of the data in the database.

After the validation process, the query result will give you the final data. Now, apply a set of rules to get duplicate and unwanted data. As a result, it automatically will reject the data. This is how the transformation process works.

Loading

This is the final stage of the ETL process. This phase defines the results of data extraction and transformation. After the two steps of EPL, the data then loads into the database of the data warehouse. You can also take the help of the SELECT command while data is loading into the database. As a result, once you load the data, the database refreshes.

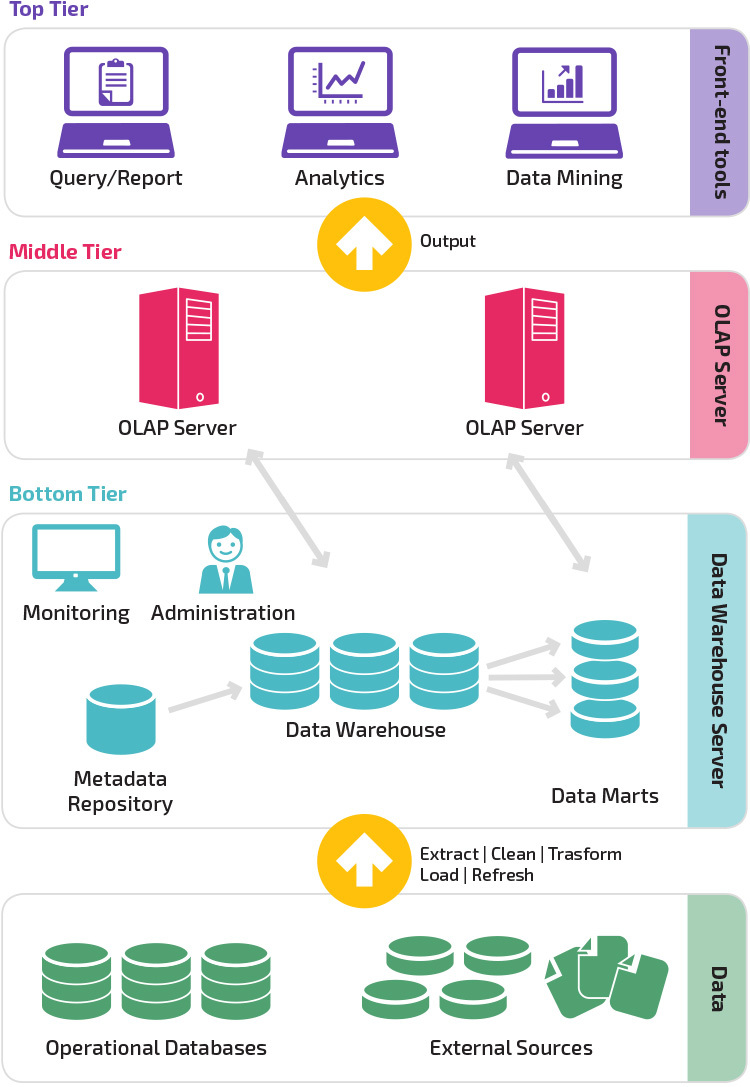

Data Warehouse Architecture Model (Three-Tier Architecture)

A data warehouse is an RDBMS (Relational Database Management System), that is well developed for query analysis. A data warehouse maintains huge data in the database to make the changes in historical analysis. In this session, I will discuss the three-tier architecture model of the data warehouse. In conclusion, this three-tier architecture contains three layers as follows.

- Bottom-Tier Layer

- Middle-Tier Layer

- Top-Tier Layer

Bottom-Tier Layer

The bottom tier layer contains all of the database servers in the data warehouse which relates to the relational database system. Data is extracted from external and operational databases with the help of a backend tool.

Then, we can feed data into the database of the warehouse by performing the ETL process (Extract, Transform, and Loading). Basically, we use the ETL process to load data into the bottom layer of the data warehouse architecture model. In addition, the bottom layer contains several entities like:

- Data warehouse

- Data Marts

- Metadata Repository

- Monitoring & Administration

1. Data Warehouse

A data warehouse is a sophisticated form of operational database that contains only the required information and allows to get fast access to the information.

2. Data Marts

Data Mart is an optimized version of the data warehouse which relies on a particular subject area. A data mart maintains an access layer to collect the relevant information from the organizational data store.

Therefore, data marts only focus on specific business architecture to build the warehouse in a large size that takes less time and generate organization revenue. Basically, it divides the data into small portions like sales information, customer information, and items information. In addition, some key points to remember about data mart are as follows:

- Data Marts are Windows, Unix, and Linux based servers that create data marts. Creating data marts is cost-effective.

- Implementation of data marts cycles cannot be stored for a long time means it is only valid for weeks not for months and years.

- Data marts are much smaller in size but more flexible. Data marts can customize the data by their departments.

- Because of the user understanding, the data mart requires less implementation as compared to the data warehouse. As a result, data mart can improve the business process.

Types: Data Mart

Data marts contain three types as follows

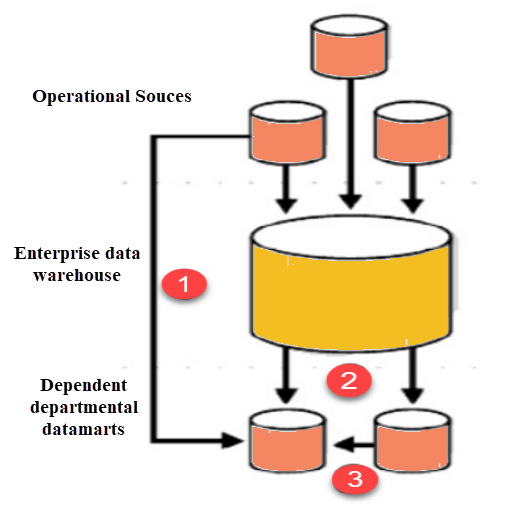

- Dependent data mart directly sourced from the database of a data warehouse.

- Independent data mart sourced with more than one data warehouse.

- Hybrid data mart converts the data from the data warehouse to an operational database.

So, let’s understand the data marts types in brief.

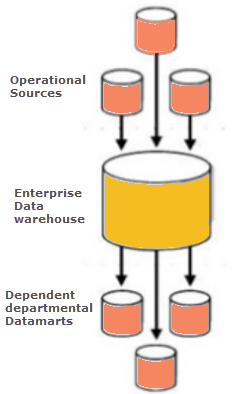

Dependent Data Mart

Dependent data mart accesses data sources directly from a single data warehouse database. This type of data mart depends only on an enterprise data warehouse or centralized data warehouse. You can access only one database at a time. But, if you want more than one, then you have to individually configure them.

The dependent data mart provides a way for end-users to easily access data warehouses and data marts together, but it will depend on the need. As a result, this data mart works from top to bottom as seen in the image above.

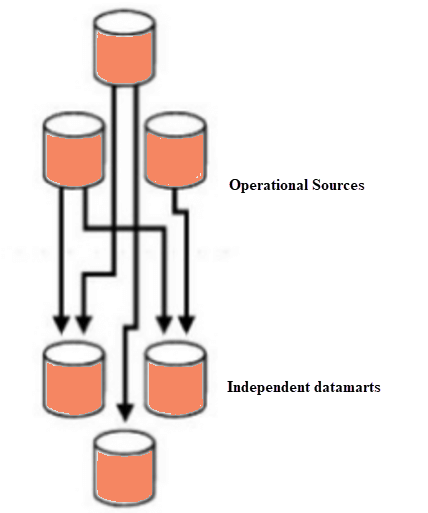

Independent Data Mart

This type of data mart works without the use of any centralized system or data enterprise warehouse. Independent data mart divides the data into small portion within an organization.

Therefore, independent data mart does not contain any relation value with data enterprise warehouse and other operational data marts. Hence, it works independently from the operational system. For this reason, you need to develop a new centralized store where an end-user can analyze the data multiple times.

Hybrid Data Mart

A hybrid data mart is a mixture of multiple inputs from external sources in the data warehouse. Basically, it works in the ad-hoc environment. It is designed to work on more than a database and also provide the fastest implementation for any big organization. It requires less cleaning process and supports a big database. Hybrid data mart contains more flexibility than dependent and independent data marts for small data applications.

The Implementation Process of Data Mart

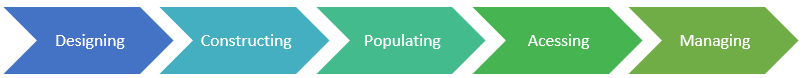

A data mart contains five implementation process as follows.

- Designing

- Constructing

- Populating

- Accessing

- Managing

Designing

Designing is the 1st phase of implementation in the data mart. It covers all actions between the initial request of data mart to collect data through the basic data mart requirements. It also discovers both the physical & logical model of the data mart. In addition, this phase contains some tasks as follows.

- Collect the required technical and business requirement.

- It verifies the source of data and selects the proper subset of data.

- It helps in making the physical and logical architecture of the data mart.

Constructing

Constructing is the 2nd phase of datamart, it creates the logical and physical architecture of the data mart that provides fast access to data. In addition, this phase contains some tasks as follows.

- An RDBMS stores the constructed data which contains the addition of the data & deletion of the data.

- By using a SQL query, you can perform several functions like indexes, views, and tables.

- Constructed data remains secure by using SQL security libraries.

Populating

This is the third phase of data mart process, that covers all the steps which are used in the above two phases of data mart like getting the data from the external source, cleaning of the data and modifying of the data. In addition, this phase contains some tasks as follows.

- Select the target sources for getting the data.

- Extraction of data from the sources.

- Data cleaning and data transformation.

- Loading and storing the data (metadata) into the database.

Accessing

This is the 4th phase of data mart implementation, this phase makes the data usable for data analysis, querying the data, creating the chart, graphs, and reports to publish them into the external market for business objectives. Furthermore, an end-user submits the set of queries and display the final result of the queries.

Managing

This is the fifth, and last, phase of data mart implementation, that covers all of the tasks which relate to management like:

- Providing user access authentication.

- Providing secure, clean, and accurate data to the data warehouse.

- Optimizing the system for better results and performance.

- Providing the backup in case of system failure.

3. Metadata Repository

Metadata is another form of simple data.

A simple data which represent different data format call as metadata. Metadata segregates the especially relevant information of the data. It also provides detailed information on it. So, let’s assume an index of the notebook behaves as metadata for the internal content of the notebook. It also defines the warehouse objects and roadmap in the data warehouse.

Now, we must understand the metadata repository, which is an internal part of the data warehouse system. It contains some metadata categories as follows.

Business Metadata: This type of metadata covers business information like business goals, owner information, changing policies information and business definition, etc.

Operational Metadata: It contains a lineage of data and data currency. Data currency defines the active mode of data, purged, and archived. Data lineage refers to the data transformation and migration rules.

Mapping of data from the operating system to Data warehouse: This type of metadata contains sources database, the content of the data sources, extraction of data, data partition, cleaning of data, the transformation of data, purging of data, and refreshing of data rules.

Summarization Algorithms: It contains data aggregation, dimension algorithms, data granularity, and data summarization.

4. Monitoring & Administration

This element is basically used for data refreshment and disaster recovery. It set the limitation in the size of the data warehouse and provides data in synchronization. Further, it controls the ranges of query and numbers format. It helps to increase the performance better of the database.

Middle-Tier Layer

In the middle-tier, there is an OLAP server that works as a single processing combination of ROLAP and MOLAP database systems. This OLAP server provides the multidimensional way to get the data model. For this reason, analysts and managers can provide the consistent and fastest way to get the information.

ROLAP stands for Relational On-Line Analytical Process that manipulate the stored data in the relational database. ROLAP model can easily maintain large data. MOLAP stands for Multidimensional OLAP system that maintains dynamic multidimensional operations and the data. Data can be stored in the multidimensional cube. It provides the fastest data retrieval due to MOLAP cubes. The whole structure of the middle layer is to represent the abstracted view of the data warehouse. As a result, this layer contains common behavior between database and end-user.

Components of OLAP Server

There are three components in the OLAP system as follows.

- Relational OLAP

- Multidimensional OLAP

- Hybrid OLAP

Relational OLAP

Basically, ROLAP works on the stored data in the relational database. ROLAP server defines the relationship between front-end(client) tools and relational back-end tools. ROLAP server provides the way to manage and store the data by using relational and extended DBMS. Therefore, this server can easily handle a large number of data. But, it contains a drawback that it has some limited SQL functionalities to maintain the database and aggregate tables.

Multidimensional OLAP

Multidimensional OLAP system handles the multidimensional data through array-based storage systems. It also provides an optimal solution for dice and slice operations. MOLAP performs the fastest access of the data in comparison with relational OLAP. It can also handle complex calculations. But, it contains some drawbacks like data aggregation cannot perform while changing the dimension of cubes. MOLAP maintains a small number of data.

Hybrid OLAP

Hybrid is a complete mixture of MOLAP & ROLAP servers. A hybrid server provides the fastest accessibility than MOLAP & ROLAP servers. It contains better scalability and computation. It also maintains a big number of data (More than a terabyte).

Note: OLAP contains an additional type as “Specialized SQL servers”, that allows to execute advanced functions of SQL query and provide better processing support than Snowflake and Star schemas.

So, let’s look at the difference between OLAP and OLTP. This is the most important part of the data warehouse, which could be asked in the interviews for data warehousing profile.

OLAP vs OLTP

|

OLAP |

OLTP |

|

On-line Analytical Processing system |

On-line Transaction Processing System |

|

It contains a functionality of query management. |

OLTP contains a functionality of modifying the database. |

|

It performs single modification due to only SELECT command. |

This performs several modifications due to INSERT, UPDATE & DELETE |

|

OLAP use only data warehousing process |

It uses only extended DBMS |

|

OLAP provides data analysis and retrieval of the data. |

OLTP provides data manipulation and modification. |

|

Tables do not perform data normalization |

Tables perform data normalization |

|

It creates seconds to minutes response time |

It creates in a millisecond. |

|

Data is not organized in the database |

Data is organized in the database. |

|

It contains the read-only process. |

It contains both read and write process. |

|

OLAP belongs to customer oriented system. |

OLTP belongs to the market-oriented system. |

|

It solves complex query due to data aggregation rules. |

Here, the query is simple and standardized form. |

|

OLAP requires back up time to time but is not mandatory. |

It requires full back up. |

|

It is subject oriented. Example: DB changes with the subject like marketing, purchase, and sale etc. |

It is application oriented. Example: Retails, Airline, and banking etc. |

|

OLAP is used by only Users like Managers, CEO, MD etc. |

OLTP is used by Database professionals and DBA. |

|

Hundreds of users can perform actions in OLAP |

Thousands of users can perform actions in OLTP. |

|

OLAP help to increase business productivity. |

It helps in user’s productivity, |

|

A user can create the view by using spreadsheets |

It can easily maintain the view. |

|

It provides historical data processing |

OLTP provides day to day data processing |

|

It mainly focuses on Data out. |

It mainly focuses on Data In. |

|

Totaly based on Snowflake and Star Schema. |

Purely based on ER Model (Entity Relationship Model) |

Top-Tier Layer

Top-Tier is a front-end client-side layer in a three-tier model of the data warehouse. This contains reporting & query tools, data mining & data analysis tools. Here, reporting tools contain report writers & productive reporting tools. Analysis tools are also used to make charts based on data mining results. Data mining tools define especially relevant information from the hidden patterns. Now, we have completed the three-architecture model of the data warehouse.

Conclusion

I hope you guys enjoyed this tour of the data warehouse. A simple data warehouse is a hub where the especially relevant information resides in the database. There are many databases for storing the value but I explained the relational and extended database management system. Because they both contain maximum data storage than other databases. This is a complete tour from definition to its conclusion. In addition, this article will help to learn what exactly a data warehouse is, and why is this so important.

I also explained the whole concept of the data warehouse from scratch which will help to learn for freshers in their interviews and the exams. In the data warehouse architecture, metadata plays a vital role as it species the values, the point of connection, source, features, and usage of the data warehouse. In addition, last but not least, the main topic of this article is the difference between OLAP and OLTP. So, most of the companies who hire a candidate or employee for data warehousing profile must ask these differences.

Thank you!

- Business Intelligence Vs Data Analytics: What’s the Difference? - December 10, 2020

- Effective Ways Data Analytics Helps Improve Business Growth - July 28, 2020

- How the Automotive Industry is Benefitting From Web Scraping - July 23, 2020