
Defect Management System

During software testing, we may detect mismatches or deviations from the requirements. This section discusses how to report such a mismatch (a defect) and track it using appropriate mechanisms. People often assume this task is the easiest of all, compared with designing and executing test cases. However, it is genuinely difficult to convince others that a raised defect is worth sharing with the development team that can fix it. This is why defects need proper reporting and tracking mechanisms.

Purpose

It is advisable to report a bug as soon as possible, because the earlier a bug is found, the more time remains in the schedule to fix it. Bugs also need to be described effectively. Suppose you were a programmer and received the following bug report from a tester: “Whenever I type a bunch of random characters in the login box, the software starts to do weird stuff.” How would you even begin to fix this bug without knowing what the random characters were, how big a bunch is, and what kind of weird stuff was happening? So, a test engineer must specify exactly what the bug is.

Defect Report Format

During test execution, test engineers are required to report the defects they identify to the development team using a defect report format.

(Filled in by the tester)

1. Defect ID: A unique number or name.

2. Description: A summary of the defect.

3. Build version ID: The version number of the build in which the test engineer found this defect.

4. Feature: The name of the module or functionality in which the test engineer found this defect.

5. Test case title: The title of the failed test case.

6. Reproducible: Yes/No (Yes if the defect appears every time during test execution).

7. If Yes: Attach the test procedure or script.

8. If No: Attach strong reasons and a snapshot.

9. Severity: The severity of the defect with respect to the functionality.

10. Priority: The importance of the defect from the customer's point of view [high, medium, low].

11. Status: New / Reopen

12. Reported By: Name of the test engineer.

13. Reported On: Date of reporting.

14. Assigned To: The name or ID of the person responsible for receiving this defect report.

15. Suggested Fix: A suggestion for accepting and resolving this mismatch.

(Filled in by the developers)

16. Fixed By: Project manager or team leader.

17. Resolved By: Name of the programmer.

18. Resolution Type: Accepted, rejected, or postponed.

19. Resolved On: Date of resolution.

20. Approved By: Signature of the project manager.
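The report format above maps naturally onto a record type in a tracking tool. The following is a minimal Python sketch, assuming each report is stored as one record; the class name `DefectReport`, its field names, the `validate()` checks, and the sample values are all hypothetical and simply mirror fields 1 to 20 and the Yes/No attachment rule.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

PRIORITIES = {"high", "medium", "low"}
TESTER_STATUSES = {"New", "Reopen"}


@dataclass
class DefectReport:
    # Fields 1-15: filled in by the tester (field names are hypothetical)
    defect_id: str
    description: str
    build_version_id: str
    feature: str
    test_case_title: str
    reproducible: bool
    severity: str
    priority: str
    reported_by: str
    reported_on: date
    assigned_to: str
    status: str = "New"
    test_procedure: Optional[str] = None        # field 7: needed if reproducible
    reasons_and_snapshot: Optional[str] = None  # field 8: needed if not reproducible
    suggested_fix: Optional[str] = None         # field 15
    # Fields 16-20: filled in by the developers
    fixed_by: Optional[str] = None
    resolved_by: Optional[str] = None
    resolution_type: Optional[str] = None       # accepted, rejected or postponed
    resolved_on: Optional[date] = None
    approved_by: Optional[str] = None

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the tester's part is complete."""
        problems = []
        if self.priority not in PRIORITIES:
            problems.append("priority must be high, medium or low")
        if self.status not in TESTER_STATUSES:
            problems.append("status must be New or Reopen")
        if self.reproducible and not self.test_procedure:
            problems.append("attach the test procedure or script")
        if not self.reproducible and not self.reasons_and_snapshot:
            problems.append("attach strong reasons and a snapshot")
        return problems


# Illustrative values only.
report = DefectReport(
    defect_id="D-101", description="Login page hangs for a 300-character user name",
    build_version_id="1.4.2", feature="Login", test_case_title="TC_Login_07",
    reproducible=True, severity="high", priority="high",
    reported_by="Tester A", reported_on=date(2024, 1, 15), assigned_to="Test Lead B",
)
print(report.validate())  # ['attach the test procedure or script']
```

Returning a list of problems instead of raising on the first one lets the tester see everything missing from the report in a single pass.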

Defect Submission

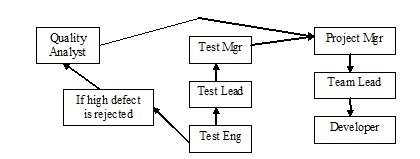

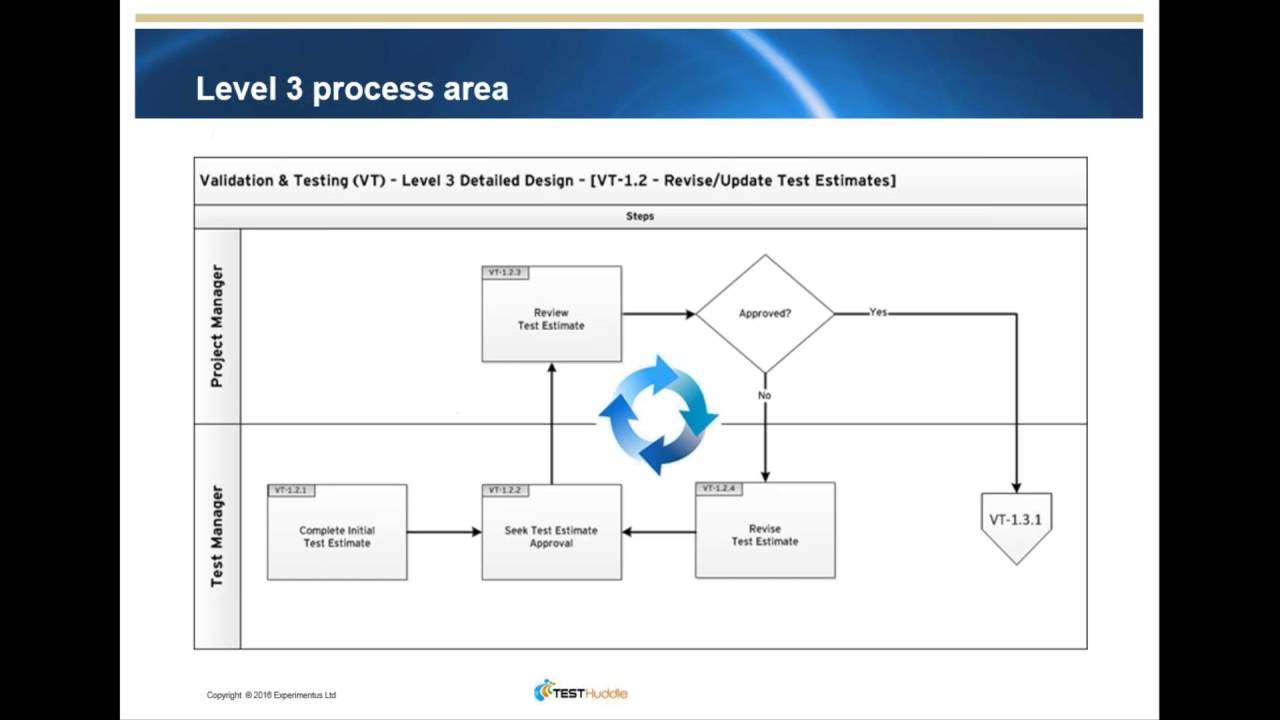

After documenting a defect in the format above, the test engineer must communicate it to the point of contact, who in turn sends these defect profiles to the development team for resolution. Here, we present the usual defect submission process; it differs between an MNC and a small-scale organization, depending on the availability of resources. The figures above show the defect submission process for a large-scale organization and for medium and small-scale organizations.

In medium and small-scale organizations, the project manager acts as the quality analyst. Hence, he or she takes care of defect reporting, i.e. decides whether a reported defect is accepted or rejected.

DEFECT LIFE CYCLE

We know very well that each person passes through several stages in a lifetime, such as birth, schoolboy, lover, soldier, judge, and old age. A person enters this world by birth and exits it through death. Interestingly, we can attribute a similar life cycle to a defect. In this topic, we discuss the various stages of a defect, from its birth to its death.

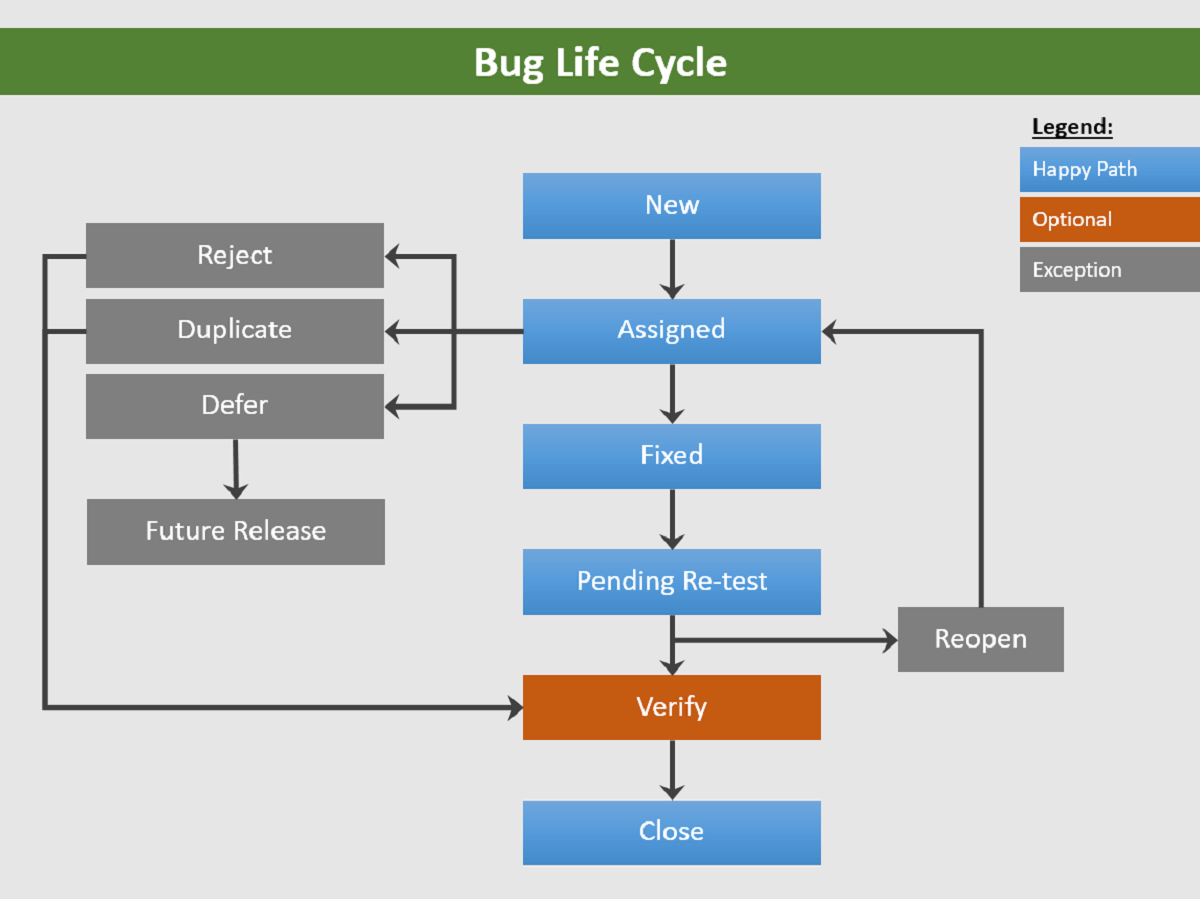

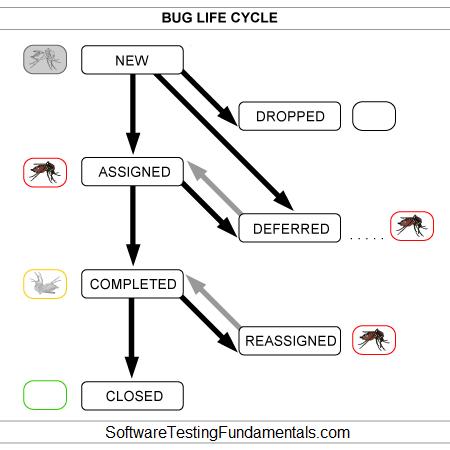

When a mismatch is detected in an application, the test engineer fills in the defect profile document with a complete description and sends it to the development team through his or her point of contact. As soon as the testing team detects a defect, they assign it the status ‘New’. Then, based on its resolution type, the development team sets the status to ‘Open/Born’ (if they formally accept it as a defect), ‘Rejected’ (if they are not convinced that it is a defect), or ‘Deferred’.

The status ‘Deferred’ means that the developer has a strong reason not to fix the defect in the current build (for example, because the defect is of low importance) and postpones it to the next version of the build, and the testing team also accepts this decision. The defect is then closed or reopened depending on whether it still exists in the next version of the build. Here, we summarize five possible paths a defect can take through its life cycle.

- New->Open->Resolve->Closed

- New->Reject->Closed

- New->Reject->Reopen->Resolve->Closed

- New->Deferred

- New->Open->Resolve->Reopen->Closed
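These five paths can be read as a small state machine. The following is a minimal Python sketch, assuming status names exactly as they appear in the paths; it derives the allowed transitions from the listed paths and nothing more, then checks whether a recorded status history follows them. `PATHS`, `build_transitions`, and `is_valid_history` are hypothetical names.

```python
from typing import Dict, List, Set

# The five life-cycle paths, written as status sequences.
PATHS = [
    ["New", "Open", "Resolve", "Closed"],
    ["New", "Reject", "Closed"],
    ["New", "Reject", "Reopen", "Resolve", "Closed"],
    ["New", "Deferred"],
    ["New", "Open", "Resolve", "Reopen", "Closed"],
]


def build_transitions(paths: List[List[str]]) -> Dict[str, Set[str]]:
    """Collect every consecutive (status -> next status) pair that appears in a path."""
    transitions: Dict[str, Set[str]] = {}
    for path in paths:
        for current, nxt in zip(path, path[1:]):
            transitions.setdefault(current, set()).add(nxt)
    return transitions


TRANSITIONS = build_transitions(PATHS)


def is_valid_history(history: List[str]) -> bool:
    """True if the history starts at New and every status change is an allowed transition."""
    if not history or history[0] != "New":
        return False
    return all(nxt in TRANSITIONS.get(cur, set()) for cur, nxt in zip(history, history[1:]))


print(is_valid_history(["New", "Open", "Resolve", "Reopen", "Closed"]))  # True
print(is_valid_history(["New", "Open", "Closed"]))                       # False
```

Because the transitions from all paths are merged, the table also accepts combinations of the listed paths (for example, a defect that is resolved, reopened, and resolved again). A team that closes or reopens deferred defects in the next build would add those transitions explicitly.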

Sometimes the community shows the defect life cycle in this format.

Defect resolution type

After receiving the defect report from the testing team, the responsible developers hold a review meeting on the reported defects and then send a resolution type back to the testing team for further communication.

The 12 resolution types are classified as follows:

1. Duplicate: Rejected, as this defect is the same as a previously accepted defect.

2. Enhancement: Rejected, as this defect relates to future requirements of the customer.

3. Hardware limitations: Rejected, as this defect is raised with respect to limitations of the hardware device.

4. Software limitations: Rejected, as this defect is raised with respect to limitations of software technologies.

5. Need more information: Neither accepted nor rejected; the developer requires more information to understand this defect.

6. Not reproducible: Neither accepted nor rejected; the developer requires the correct test procedure to reproduce this defect at the developer site, because the defect shown by the tester does not appear on the developer's system.

7. No plan to fix it: Neither accepted nor rejected; the developers require some time, as they are resolving other bugs.

8. Fixed: Accepted and ready to resolve.

9. Fixed indirectly: Accepted but postponed to a future version (deferred).

10. User Direction: Neither accepted nor rejected; the developers add a message to the screen about the defect.

11. Functions as designed: Rejected, as the code is correct with respect to the design documents, i.e. the HLDs and LLDs.

12. Not Applicable: Rejected because the reported defect is not meaningful.

Types Of Defect

Generally, during functional and system testing, test engineers find 10 types of bugs, as shown in the following table:

| Bug type | Examples |
| --- | --- |
| User interface bugs (low severity) | Spelling mistake: high priority but low severity |
| Input domain bugs (medium severity, as they disturb functionality) | Does not accept a valid type, e.g. an age field that does not take numeric values: high priority; accepts an invalid type: low priority |
| Error handling bugs (medium severity) | Does not return an error message: high priority, medium severity; error message with a complex meaning: low priority |
| Calculation bugs (high severity, show stopper) | Wrong dependent outputs: high priority (stops all the modules); wrong final output: low priority (stops only that module) |
| Control flow bugs (high severity) | Race condition (hang/deadlock): high priority, high severity |
| Load condition bugs (high severity) | Does not allow multiple users: high priority, high severity |
| Hardware bugs (high severity) | The application is unable to activate a device: high priority, high severity |
| Version control bugs (medium severity) | Differences between two consecutive build versions, i.e. a blind mistake |
| ID control bugs (medium severity) | Version number missing, wrong version number, logo missing, wrong logo |
| Source bugs (medium severity) | Mistakes in help documents, which must be resolved before release |
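The table rates each example on two independent scales: severity (impact on functionality) and priority (importance to the customer). A minimal Python sketch of how a tracker might order a fix queue from these two ratings; the priority-first ordering, the `Bug` class, and `triage_order` are assumptions for illustration, not a rule stated in this article.

```python
from dataclasses import dataclass
from typing import List

# Lower number = more urgent, using the high/medium/low scale from the table.
LEVEL = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Bug:
    title: str
    severity: str
    priority: str


def triage_order(bugs: List[Bug]) -> List[Bug]:
    """Sort by priority first, then by severity as a tie-breaker (an assumed policy)."""
    return sorted(bugs, key=lambda b: (LEVEL[b.priority], LEVEL[b.severity]))


bugs = [
    Bug("Spelling mistake", severity="low", priority="high"),
    Bug("Error message with complex meaning", severity="medium", priority="low"),
    Bug("Race condition (deadlock)", severity="high", priority="high"),
    Bug("No error message returned", severity="medium", priority="high"),
]
for bug in triage_order(bugs):
    print(bug.title)
```

Under this policy the low-severity spelling mistake is still handled before the low-priority error-message issue, which reflects the table's point that priority and severity are rated separately.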

DEFECT TRACKING SYSTEM

The primary objective of software testing is to uncover the defects, issues, and problems in an application. A well-defined defect management process needs to be established to manage defects properly and effectively. Various tools are available on the market today to address the requirements of the defect management process, e.g. Buggit, Rational ClearQuest, PVCS, Silk RADAR, etc. These tools allow the defect management process to be customized. In addition, some organizations use tools developed in-house for defect management. The key idea is to have a well-defined defect management process supported by a tool that matches the organization's requirements. Defect management tools also provide capabilities for handling the escalation requirements of defects.
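Escalation is usually a configurable rule inside the tracking tool. Each of the tools named above has its own configuration, which is not shown here; the following is a tool-independent Python sketch, and the thresholds in `ESCALATION_DAYS`, the set of settled statuses, and the function `needs_escalation` are hypothetical.

```python
from datetime import date

# Hypothetical policy: maximum number of days a defect may stay unresolved,
# per severity, before it is escalated (for example, to the project manager).
ESCALATION_DAYS = {"high": 2, "medium": 5, "low": 10}

# Statuses for which no escalation is needed.
SETTLED_STATUSES = {"Closed", "Deferred"}


def needs_escalation(severity: str, status: str, reported_on: date, today: date) -> bool:
    """Return True if an unresolved defect has been open longer than its severity allows."""
    if status in SETTLED_STATUSES:
        return False
    age_in_days = (today - reported_on).days
    return age_in_days > ESCALATION_DAYS[severity]


print(needs_escalation("high", "Open", date(2024, 3, 1), date(2024, 3, 4)))  # True
print(needs_escalation("low", "Open", date(2024, 3, 1), date(2024, 3, 4)))   # False
```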

Test Closure

After all possible test cases have been executed and the corresponding bugs resolved, the test lead conducts a review meeting with the test engineers. In this meeting, the test lead verifies whether the testing team has met the exit criteria, based on the following factors.

Factor 1: Coverage analysis

It includes two types of coverage:

- Requirement-based coverage

- Test Requirement Matrix-based coverage
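Requirement-based coverage can be computed from a traceability matrix that maps each requirement to the test cases that verify it. A minimal Python sketch, assuming that a requirement counts as "covered" once at least one of its mapped test cases has been executed; the function name `requirement_coverage` and the sample IDs are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def requirement_coverage(
    matrix: Dict[str, List[str]], executed: Set[str]
) -> Tuple[float, List[str]]:
    """Return (coverage percentage, uncovered requirement IDs).

    A requirement counts as covered when at least one test case mapped to it
    in the traceability matrix has been executed.
    """
    uncovered = [req for req, tests in matrix.items()
                 if not any(t in executed for t in tests)]
    covered = len(matrix) - len(uncovered)
    percent = 100.0 * covered / len(matrix) if matrix else 0.0
    return percent, uncovered


# Hypothetical matrix: requirement ID -> test case IDs that verify it.
matrix = {"R1": ["TC1", "TC2"], "R2": ["TC3"], "R3": [], "R4": ["TC4"]}
percent, uncovered = requirement_coverage(matrix, executed={"TC1", "TC3"})
print(percent, uncovered)  # 50.0 ['R3', 'R4']
```

Listing the uncovered requirements, not just the percentage, gives the review meeting something concrete to act on.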

Factor 2: Bug Density

This can be illustrated with an example scenario in which complete system testing has been performed on all the modules (here A, B, C, and D) of the application.

The following table lists the corresponding bug percentage in each module:

| Module | Number of bugs (%) |
| --- | --- |
| A | 20% |
| B | 20% |
| C | 40% |
| D | 20% |

In this scenario, the module with a very high bug density (defined as its percentage of the bugs) needs to be regressed. The test lead therefore instructs the testing team to conduct postmortem testing on module C, because that module accounted for the highest percentage of bugs.
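The bug-density figures in the table are each module's share of the total bugs found. A minimal Python sketch of the calculation; the raw counts are hypothetical (chosen to reproduce the table's percentages), and selecting only the module or modules with the maximum density is an assumed stand-in for "very high", which a team could replace with a threshold.

```python
from typing import Dict, List


def bug_density(bug_counts: Dict[str, int]) -> Dict[str, float]:
    """Each module's share of all bugs found, as a percentage."""
    total = sum(bug_counts.values())
    return {module: 100.0 * count / total for module, count in bug_counts.items()}


def modules_to_regress(bug_counts: Dict[str, int]) -> List[str]:
    """Modules with the highest bug density (all tied modules are returned)."""
    density = bug_density(bug_counts)
    highest = max(density.values())
    return [module for module, d in density.items() if d == highest]


# Hypothetical raw counts chosen to reproduce the percentages in the table.
counts = {"A": 10, "B": 10, "C": 20, "D": 10}
print(bug_density(counts))         # {'A': 20.0, 'B': 20.0, 'C': 40.0, 'D': 20.0}
print(modules_to_regress(counts))  # ['C']
```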

Factor 3: Analysis of deferred bugs

This discussion is based on the question of whether the deferred bugs can really be postponed. Finally, after conducting a successful review, the testing team concentrates on Level-3 testing (real regression testing or postmortem testing).

After completion of Level-3 testing, the test management concentrates on user acceptance testing.

User Acceptance Testing

Before the application or product is delivered to the customer, the final level of testing is user acceptance testing (UAT). Here, people from the customer site, or model customers, give feedback on an application build before release.

There are two ways to conduct user acceptance testing: alpha testing and beta testing.

1. Alpha Testing

Alpha testing is performed on an application when development is nearly complete. Minor design changes can still be made as a result of alpha testing. In alpha testing, in-house developers and test engineers form an independent team along with a customer-site representative.

2. Beta Testing

In beta testing, we test an application when development and testing are essentially complete and we need to find the final defects and problems before release. End users, non-programmers, software engineers, test engineers, or others typically perform beta testing.

Sign Off

Finally, after user acceptance testing and the resulting modifications are complete, the test lead prepares the final test summary report. This report consists of the project's test strategy, system test plan, test case titles, test case documents, requirement traceability matrix, automation programs, test logs, and defect reports.

This final test summary report becomes part of the SWRN (Software Release Note).

Conclusion

Finally, note that the definition of a defect (bug or error) used here is not the only one, and it is not entirely precise: if a tester comes across something in a system that deviates from expected behavior, it does not necessarily mean that it is a defect. We have also outlined points to consider when selecting bug and issue tracking software for an organization; evaluators can use this information to compare the web-based bug trackers available on the market.
