What is Scrapy?

Scrapy is a web crawling framework written in Python. It is used to scrape websites, whether static or dynamic, although JavaScript-heavy pages usually need extra work. Scrapy was one of the leading web scraping frameworks during 2016-2018. Its development is led by “ScrapingHub.Ltd”. In this article, I will discuss why it matters and how it works in web scraping.

Why use Scrapy?

Scrapy is a free, open source web crawling framework. It crawls websites and extracts the relevant data from them. It can also pull data from APIs. Scrapy gives you full control to extract the data of your choice, process it, and save it in a specific location. It is a fast and robust framework released under the BSD license. Its main benefits are listed below.

Features of Scrapy

Scrapy has many features, including the following.

- Scrapy is fast, so you can crawl a large number of pages in a single project.

- It has a built-in mechanism known as Selectors. With selectors you can easily extract data from web pages using XPath or CSS expressions.

- It handles requests asynchronously.

- While extracting data, it automatically adjusts the crawling speed with the help of its AutoThrottle extension.

- Scrapy is often compared with older Python tools such as “urllib2”, a Python 2 standard library module (its successor in Python 3 is urllib.request). It defines functions and classes that help open URLs, and it was very popular before the launch of Requests.

- BeautifulSoup is a Python package that parses HTML and XML documents, so you can navigate the parse tree and extract data from downloaded web pages.

- Mechanize is a Python module used to navigate web pages and interact with websites programmatically.

- Scrapy exports data in several formats like CSV, XML, and JSON.

- Scrapy can also export structured data, which can then be used by a wide range of web-based dynamic applications.

- It is a multi-platform framework that runs on Mac OS, Windows, Linux, and BSD.

- It has a companion service called “Scrapyd” that lets you upload your project and control its spiders through a JSON web service.

What is Selenium?

Selenium is a browser automation framework. It was designed primarily for automated testing of dynamic web applications across multiple browsers and platforms. It lets you open and close a browser of your choice and perform the tasks a human user would, such as clicking buttons, entering data into forms, and searching for specific content on a web page.

Why use Selenium?

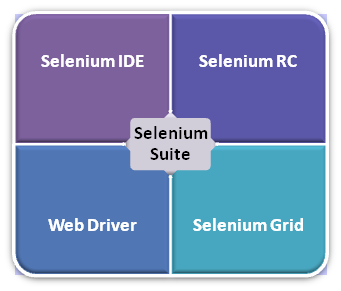

Selenium is a completely free and open source framework, and you can use it for commercial purposes too. It also suits small web applications that need only a few lines of code and a small database. Selenium itself automates web browsers; mobile apps are typically automated with companion tools built on the same WebDriver protocol, such as Appium. It is quite easy to learn and use. You can write test cases in several programming languages, such as C#, Java, Ruby, Python, and JavaScript. These test cases can run in web browsers such as Chrome, Firefox, and IE. Selenium has four components, each described below.

- Selenium Integrated Development Environment (IDE)

- Selenium Remote Control (RC)

- WebDriver

- Selenium Grid

Selenium Integrated Development Environment (IDE)

It is the simplest component of Selenium. It is a Firefox plugin that can be installed just like any other Firefox plugin.

Pros

- You do not need to know a programming language to record and debug tests in the IDE.

- It produces test reports and supports extensions.

- It works well as a prototyping tool for designing test cases.

Cons

- The IDE works only at the level of HTML and the DOM.

- It does not support loops or conditional statements.

- It executes tests more slowly than RC and WebDriver.

Features

Selenium IDE has the following features.

- Selenium IDE is highly extensible and can be used for commercial purposes.

- Selenium IDE includes the standard Selenese commands, such as open, type, verify, assert, and clickAndWait.

- It provides locators such as name, XPath, CSS selector, id, and class.

- Test cases can be exported in various formats.

- You can run the test cases on Firefox only.

Selenium Remote Control (RC)

Selenium RC was the flagship testing framework of the Selenium project. It lets you write tests in the programming language of your choice. RC works across platforms and browsers, and it supports languages such as Perl, Ruby, Python, Java, and C#.

Pros

- It supports data-driven testing and newly released browsers.

- It executes tests faster than the IDE.

- RC supports loops and conditions.

- RC can be used across platforms and different browsers.

Cons

- RC has a more complex installation process than the IDE, and it requires programming knowledge.

- To execute a session you need a running RC server.

- Its API contains redundant and confusing commands and can give inconsistent results.

- RC executes tests more slowly than WebDriver.

Features

Selenium RC has the following features.

- It supports various operating systems and most browsers.

- You can run test cases against real web browsers, rather than HtmlUnit, on different operating systems.

- You can test web applications that use AJAX in any newer browser that supports JavaScript.

WebDriver

WebDriver provides a friendly API for driving web browsers. The API is easy to understand and build on. It also makes automated test execution more stable. WebDriver is better than Selenium RC and Selenium IDE in many operations.

Pros

- Simpler installation process than Selenium RC.

- It communicates directly with the web browser and fully supports loops and conditions.

- It does not require a separate component, such as the RC server, to execute a session.

Cons

- The installation process is more complicated than that of Selenium IDE.

- It requires moderate programming knowledge.

- It cannot readily support a new browser until a driver for that browser is available.

Features

Selenium WebDriver has the following features.

- You can write tests with a basic knowledge of a programming language such as C#, Perl, or Java.

- You can execute test cases on HtmlUnit across different operating systems.

- It can produce customized test output.

Selenium Grid

It is a powerful tool used together with Selenium RC or WebDriver to execute test cases in parallel across different web browsers and machines. You can execute multiple test cases at the same time.

Pros

- It saves a lot of time during execution.

- It executes test suites faster than the other Selenium components, because tests run in parallel.

Features

Selenium Grid has the following features.

- Test cases still run when the browser window is minimized.

- It can run tests against different browsers and machines simultaneously.

- You can also run multiple test cases at a time.

Note: Selenium RC and Selenium WebDriver have since been merged into a single framework, Selenium 2, maintained by the “SeleniumHQ” project.

The technical difference – Scrapy and Selenium

It can be difficult to decide whether Scrapy or Selenium is the better fit for your project, so let’s compare them below.

JavaScript

Both Scrapy and Selenium work well, but you will usually pick one as the main tool for a project. On many sites, the data you want only appears after a series of “ajax/pjax” requests. Reproducing those requests in Scrapy makes the data extraction workflow complex. To handle this situation, I would recommend using Selenium instead of Scrapy.

Data Size

Before writing any code, estimate the total size of the data you will extract. Scrapy downloads only the URLs you request. Selenium drives a whole browser, which also loads the JavaScript, CSS, and image files, and that is why Selenium is considerably slower than Scrapy when crawling a website. If the data set is large, Scrapy is the better choice because it saves a lot of time during data extraction.
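To put rough numbers on that estimate, here is a back-of-the-envelope sketch. The page count, per-page timings, and worker count are figures I made up for illustration, not measurements of either tool, so plug in your own values.

```python
def estimated_crawl_hours(pages, seconds_per_page, parallel_workers=1):
    """Rough wall-clock estimate: total page time divided across workers."""
    total_seconds = pages * seconds_per_page / parallel_workers
    return total_seconds / 3600


# Assumed figures for illustration only, not benchmarks.
browser_hours = estimated_crawl_hours(100_000, seconds_per_page=3.0)
crawler_hours = estimated_crawl_hours(
    100_000, seconds_per_page=0.5, parallel_workers=16
)

print(f"browser-driven, one page at a time: {browser_hours:.1f} h")
print(f"concurrent HTTP crawler: {crawler_hours:.1f} h")
```

Even with generous assumptions for the browser, the gap grows quickly with the number of pages, which is the point of estimating first.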

Extensibility

Scrapy is well designed. You can write pipelines and custom middleware to add your own functionality, which makes Scrapy both ‘flexible’ and ‘robust’. Because of its good architecture, you can easily move a spider from one project to another. A Scrapy pipeline can save the extracted data to a database quickly. If your project is small, use Selenium to keep it simple, because small projects contain less logic. If the project has heavier requirements, such as a data pipeline or proxy support, Scrapy is the better fit.
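Here is a minimal sketch of such a pipeline, assuming the spider yields items with `title` and `url` fields and that a local SQLite file is good enough as the database. The class name, table layout, and file name are all hypothetical; only the three method names follow Scrapy's item pipeline interface.

```python
import sqlite3


class SQLitePipeline:
    """Hypothetical item pipeline that stores scraped items in SQLite."""

    def __init__(self, db_path="items.db"):
        self.db_path = db_path

    def open_spider(self, spider):
        # Called once when the spider starts: open the database.
        self.connection = sqlite3.connect(self.db_path)
        self.connection.execute(
            "CREATE TABLE IF NOT EXISTS items (title TEXT, url TEXT)"
        )

    def process_item(self, item, spider):
        # Called for every item the spider yields.
        self.connection.execute(
            "INSERT INTO items (title, url) VALUES (?, ?)",
            (item.get("title"), item.get("url")),
        )
        return item  # pass the item on to the next pipeline stage

    def close_spider(self, spider):
        # Called once when the spider finishes: persist and clean up.
        self.connection.commit()
        self.connection.close()
```

A pipeline like this is switched on through the `ITEM_PIPELINES` setting, for example `{"myproject.pipelines.SQLitePipeline": 300}`, where `myproject` stands in for your own project package.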

Best Practices between Selenium and Scrapy

- If you want to scrape more than a few thousand pages, go with Scrapy because it works faster than Selenium in this situation. If you want to scrape pages with web forms, JavaScript, or form submissions, you can go with Selenium.

- Scrapy has built-in caching utilities that make development easier, which is quite difficult to achieve with Selenium. Scrapy can also change spider arguments based on the user's request, which is also difficult with Selenium.

- If you want to extract data from UI-heavy websites or automate a website, choose Selenium. If you need to crawl a website broadly, following links across many pages, go with Scrapy.
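As a small illustration of the caching point, Scrapy's HTTP cache can be switched on from the project settings. This is only a sketch of a `settings.py` fragment; the one-hour expiry and the directory name are arbitrary values I picked for the example.

```python
# settings.py (fragment)

# Store every response on disk and reuse it on later runs,
# so repeated runs during development do not hit the website again.
HTTPCACHE_ENABLED = True

# Treat cached responses older than one hour as stale (0 means never expire).
HTTPCACHE_EXPIRATION_SECS = 3600

# Folder for the cache, relative to the project's .scrapy data directory.
HTTPCACHE_DIR = "httpcache"
```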

Web scraping process difference – Scrapy and Selenium

Both Scrapy and Selenium can be used from Python. They can produce the same result, but each follows a different process to scrape a website, as shown below.

| S.No | Scrapy | Selenium |
| --- | --- | --- |
| 1 | Create set up and open a new project | Create set up and open a new project |
| 2 | Import the scrapy Spider into the project | Install Selenium package, Chrome driver and Virtualenv in the new project |
| 3 | Parse the HTML code | Import the required modules |
| 4 | Execute the spider | Select the target website |
| 5 | Crawling on Multiple web pages | Open a website page and create a new instance |
| 6 | Extract the data from the website | Load and interact with the page event |
| 7 | Provide XPath | Make the request by using URLs |
| 8 | Extract Data into Spider | Get the response from the website |
| 9 | Store the data into the database | Extract the data and save into the database |

Conclusion

I hope you enjoyed this article. If you are planning to scrape a website with Python, it is important to understand the difference between Scrapy and Selenium, because both are major Python tools for the job. Scrapy and Selenium differ in their mechanisms, features, and the way they work. This article is aimed at readers with a technical background because it relies on programming concepts, so it may be hard to follow otherwise.

So, which is better, Scrapy or Selenium? There is no single answer. It depends on the project requirements. If you need a custom web scraping service, you can hire a company. In short, if the project is small, Selenium is a reasonable choice; if it is demanding, with needs such as heavier programming, web crawling, and flexibility in code, your choice should be Scrapy.

Feel free to leave your suggestions or questions in the comment section.

Thank you for reading!
